Targeted weed-control technology, commonly referred to as “see and spray,” enables ground-based equipment to automatically apply herbicide directly to the target weed, leading to significant herbicide savings (11). This is made possible through deep convolutional neural networks (DCNNs), which facilitate real-time weed recognition via computer vision. While successfully adopted in various agricultural settings, this technology has yet to be implemented in turfgrass. Its adoption is limited by the lack of turfgrass-specific weed-recognition models and the complex development processes involved (7, 14).

Where targeted application has been adopted successfully, the You Only Look Once (YOLO) algorithm has become one of the standards for weed recognition because of its strong performance and sensor compatibility (1). Research on YOLO in turfgrass is limited (10, 11, 13), and weed recognition there is further complicated by spatial variation in weed phenotypes, biotypes and growth stages; the morphological resemblance between weeds and turf; and the lack of three-dimensionality under mowed conditions (3, 7, 11). YOLO has proven effective in identifying weeds that contrast strongly with the background, for example in texture or color (10, 13), but recognizing weeds that blend into the canopy or have small parts remains a challenge (11). Earlier YOLO versions relied on object detection with bounding boxes, which, for complex-shaped targets, can enclose background features, leading to confusion and underperformance against low-contrast backgrounds such as turfgrass (5). Exploring alternative annotation methods is therefore crucial to maximize YOLO's potential. Newer YOLO models incorporate segmentation, which may improve precision by delineating object shapes more accurately.

Building an effective weed-recognition model is a multistep process, and a critical step is generating large, high-quality datasets that depict targets across diverse environmental conditions and contexts. This requires acquiring and manually labeling (i.e., annotating) images, which is time- and labor-intensive and demands human expertise (3, 7). Methods such as semi-supervised learning (SSL), in which pretrained models help accelerate labeling, have become more common (16). However, research on using SSL to accelerate image annotation for object-detection or segmentation-based weed recognition in turfgrass is lacking.

Therefore, this study was conducted to address these needs. The specific objectives were: 1) to compare the performance of turfgrass-specific YOLOv8 segmentation and object-detection models in broadleaf weed recognition using highly restricted datasets, and 2) to compare a single-step training procedure that uses manual annotation only against a two-step procedure that incorporates automated annotation by a pretrained model to expedite development. The research confirms that segmentation can effectively identify weeds with intricate shapes intertwined in turf canopies, while semi-supervised learning accelerates model development.

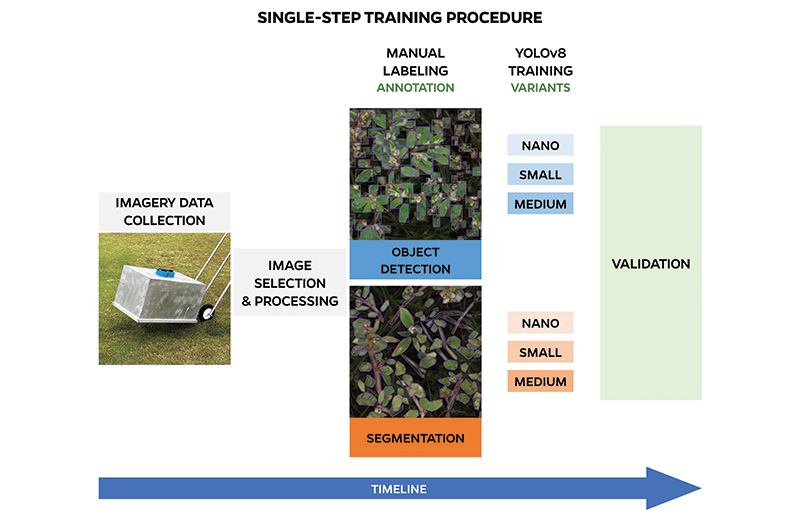

Figure 1. Workflow diagram illustrating the single-step development process for spotted spurge [Chamaesyce maculata (L.) Small] recognition models.

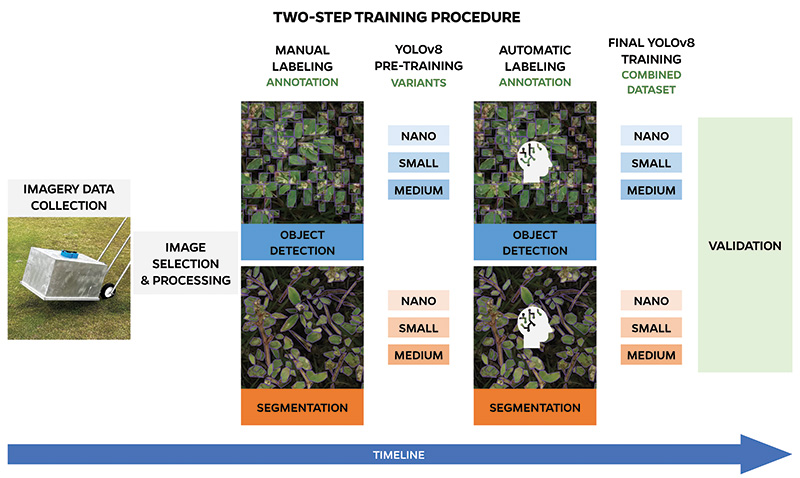

Figure 2. Workflow diagram illustrating the two-step development process for spotted spurge [Chamaesyce maculata (L.) Small] recognition models.

Materials and methods

Data acquisition and preparation

In July 2023, images of weed infestations in a Latitude 36 bermudagrass golf course fairway were collected at the University of Florida/Institute of Food and Agricultural Sciences (UF/IFAS) Plant Science Research and Education Unit in Citra, Fla., using a mirrorless camera (Sony ILCE-6400, Sony Electronics Inc., Tokyo) mounted at a fixed height of 16.93 inches (43 centimeters) on top of a lightbox (TPR Systems Inc., Milton, Fla.) that provided uniform lighting. The target was spotted spurge, a weed that is increasingly difficult to control because of herbicide resistance and limited control options (9) and whose growth habit poses challenges for recognition models (11). Each image, covering an area of 19.69 inches × 11.02 inches (50 centimeters × 28 centimeters), was divided into 530 × 300-pixel segments without loss of resolution using a custom Python script, to improve computational efficiency.
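The tiling step can be sketched as follows. This is a minimal illustration, not the authors' custom script: it assumes tiles are cut on a regular, non-overlapping grid starting at the top-left corner and that partial tiles at the right or bottom edge are skipped. The function name `tile_boxes` and the 5,300 × 3,000-pixel example frame are hypothetical. The returned (left, upper, right, lower) boxes follow the convention of Pillow's `Image.crop`, which copies pixels without resampling, so tile resolution matches the source image.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, upper, right, lower) in pixels


def tile_boxes(width: int, height: int,
               tile_w: int = 530, tile_h: int = 300) -> List[Box]:
    """Return crop boxes for non-overlapping tiles on a regular grid.

    Hypothetical helper: partial tiles at the right and bottom edges are
    skipped, which is an assumption; the original script's edge handling
    is not described.
    """
    boxes: List[Box] = []
    for top in range(0, height - tile_h + 1, tile_h):
        for left in range(0, width - tile_w + 1, tile_w):
            boxes.append((left, top, left + tile_w, top + tile_h))
    return boxes


if __name__ == "__main__":
    # Hypothetical 5,300 x 3,000-pixel frame gives a 10 x 10 grid of tiles.
    boxes = tile_boxes(5300, 3000)
    print(len(boxes), boxes[0], boxes[-1])
    # With Pillow installed, each tile could then be written out with:
    #   Image.open(path).crop(box).save(out_path)
```

Skipping edge remainders keeps every tile the same size, which simplifies batching; padding or overlapping tiles would be alternatives if no image area may be lost.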

Data annotation

Spotted spurge plants were annotated in 1,500 images using Roboflow, separately for the segmentation and object-detection tasks. Labeling for segmentation involved manually outlining individual plant parts and assigning a class to each instance. Labeling for object detection involved drawing rectangular bounding boxes around the outer margins of plant parts and assigning a class to the entire area enclosed by each box. Finally, the dataset was randomly split into two subsets: 1,200 images for model development and 300 for validation.
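To make the difference between the two annotation types concrete, the sketch below converts one segmentation outline into the corresponding detection box. It assumes the Ultralytics YOLO text-label conventions (one object per line: a class index followed by normalized coordinates, polygon vertices for segmentation and `x_center y_center width height` for detection). The polygon coordinates, the class index 0 and the function names are hypothetical, and the study's actual Roboflow export settings are not described in the source.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def seg_label(cls: int, polygon: List[Point], img_w: int, img_h: int) -> str:
    """YOLO segmentation line: class index, then normalized x y pairs."""
    coords: List[str] = []
    for x, y in polygon:
        coords += [f"{x / img_w:.6f}", f"{y / img_h:.6f}"]
    return " ".join([str(cls)] + coords)


def det_label(cls: int, polygon: List[Point], img_w: int, img_h: int) -> str:
    """YOLO detection line for the tightest box enclosing the same polygon."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    x_min, x_max, y_min, y_max = min(xs), max(xs), min(ys), max(ys)
    xc = (x_min + x_max) / 2 / img_w  # normalized box center
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w       # normalized box size
    h = (y_max - y_min) / img_h
    return f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Hypothetical outline of one spurge leaf cluster in a 530 x 300 tile.
    leaf = [(100.0, 50.0), (160.0, 60.0), (150.0, 110.0), (90.0, 100.0)]
    print(seg_label(0, leaf, 530, 300))
    print(det_label(0, leaf, 530, 300))
```

For small, compact plant parts the enclosing box adds little area beyond the outline, which is consistent with the later observation that tight part-level boxes resembled segmentation masks.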

Model training

All models developed in this research were trained and validated on HiPerGator 3.0 (University of Florida) using 10 central processing unit (CPU) cores, 32 gigabytes of memory and one graphics processing unit (GPU), following the procedures described below.

Single-step training procedure

The study used YOLOv8, the latest model version at the time, to train separate object-detection and segmentation models in nano, small and medium variants (Figure 1). All variants were trained on the same images with the appropriate labels, using default hyperparameters with 100 epochs, a batch size of 16 images and an input resolution of 544 pixels. Although hyperparameter fine-tuning can optimize performance, this research focused on comparing weed-recognition models trained on limited data, not on developing a market-ready product.
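The single-step training grid (two tasks × three variants) can be expressed compactly. This sketch assumes the Ultralytics Python package, whose `YOLO(...).train()` method accepts `data`, `epochs`, `batch` and `imgsz` arguments, and assumes training starts from the published `yolov8{n,s,m}` and `-seg` checkpoints; the dataset YAML file names are hypothetical, and the study's exact training script is not given in the source. Ultralytics is imported only inside `run_all`, so the job list can be inspected without a GPU or the package installed.

```python
from typing import Dict, List

VARIANTS = ["n", "s", "m"]  # nano, small, medium
TASKS = {
    # task: (checkpoint suffix, hypothetical dataset config file)
    "detect": ("", "spurge_detect.yaml"),
    "segment": ("-seg", "spurge_segment.yaml"),
}
TRAIN_ARGS = {"epochs": 100, "batch": 16, "imgsz": 544}


def build_jobs() -> List[Dict[str, object]]:
    """One job per task/variant pair, all sharing the same training settings."""
    jobs: List[Dict[str, object]] = []
    for task, (suffix, data_yaml) in TASKS.items():
        for v in VARIANTS:
            jobs.append({
                "task": task,
                "weights": f"yolov8{v}{suffix}.pt",  # assumed starting weights
                "data": data_yaml,
                **TRAIN_ARGS,
            })
    return jobs


def run_all(jobs: List[Dict[str, object]]) -> None:
    """Train every job (requires `pip install ultralytics` and the datasets)."""
    from ultralytics import YOLO  # lazy import so build_jobs works without it

    for job in jobs:
        model = YOLO(job["weights"])
        model.train(data=job["data"], epochs=job["epochs"],
                    batch=job["batch"], imgsz=job["imgsz"])


if __name__ == "__main__":
    for job in build_jobs():
        print(job["task"], job["weights"], job["data"])
```

Keeping the shared settings in one dictionary makes it explicit that only the task and variant change between runs, which is what allows the comparison to attribute differences to those two factors.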

Two-step training procedure

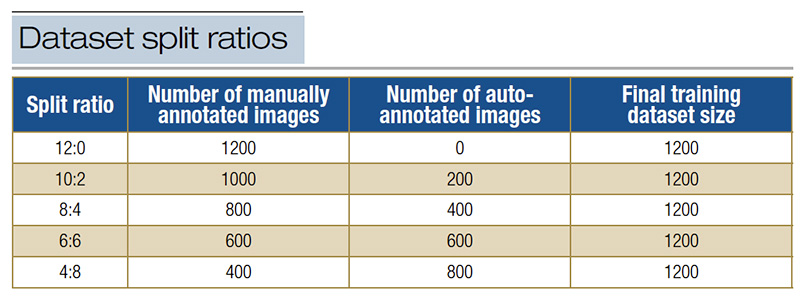

The two-step training procedure (Figure 2) consisted of pretraining followed by final training. In pretraining, models were trained as in the single-step procedure but on varying numbers of manually annotated images according to five split ratios (Table 1). In the final training phase, the pretrained model automatically annotated the remaining images, and these annotations were combined with the manual labels to form a 1,200-image dataset for retraining. YOLOv8 models (nano, small and medium) were trained for both object detection and segmentation using the same configurations as in single-step training.
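The data bookkeeping behind the two-step procedure can be sketched as below. The sketch assumes that split ratios such as 8:4 are expressed in hundreds of images (8:4 meaning 800 manually labeled and 400 auto-labeled images out of 1,200), which is consistent with the 12:0 fully manual reference but is an interpretation rather than a stated rule. The helper name `two_step_split` and the fixed random seed are hypothetical; pretraining and pseudo-labeling are left as comments because they depend on the training setup.

```python
import random
from typing import List, Sequence, Tuple


def two_step_split(image_ids: Sequence[str], ratio: Tuple[int, int],
                   unit: int = 100, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Split development images into a manual (A) and an auto-labeled (B) pool.

    Hypothetical helper: ratio=(A, B) is read in units of `unit` images,
    assumed to be hundreds.
    """
    n_manual, n_auto = ratio[0] * unit, ratio[1] * unit
    if n_manual + n_auto != len(image_ids):
        raise ValueError("ratio does not match the number of images")
    shuffled = list(image_ids)
    random.Random(seed).shuffle(shuffled)  # reproducible random assignment
    manual, auto = shuffled[:n_manual], shuffled[n_manual:]
    # Step 1: pretrain on `manual` (human labels only).
    # Step 2: the pretrained model predicts labels for `auto`; those
    #         predictions are merged with the human labels and the model
    #         is retrained on all images.
    return manual, auto


if __name__ == "__main__":
    ids = [f"img_{i:04d}" for i in range(1200)]
    # Ratios discussed in the text.
    for a, b in [(12, 0), (10, 2), (8, 4), (6, 6), (4, 8)]:
        manual, auto = two_step_split(ids, (a, b))
        automated = len(auto) / len(ids)
        print(f"{a}:{b} -> {len(manual)} manual, {len(auto)} auto "
              f"({automated:.0%} of labels generated automatically)")
```

Under this reading, the 6:6 split requires manual labels for only half of the development set, which is the sense in which the final training data doubles the manually annotated set.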

Table 1. Spotted spurge [Chamaesyce maculata (L.) Small] recognition training dataset split ratios between manually and automatically annotated images for two-step training procedure. The dataset split ratio is represented in the form of A:B, where A denotes the number of manually annotated images used for the model pretraining, and B represents the automatically labeled images generated by the pretrained model. Both A and B were combined in the final training phase.

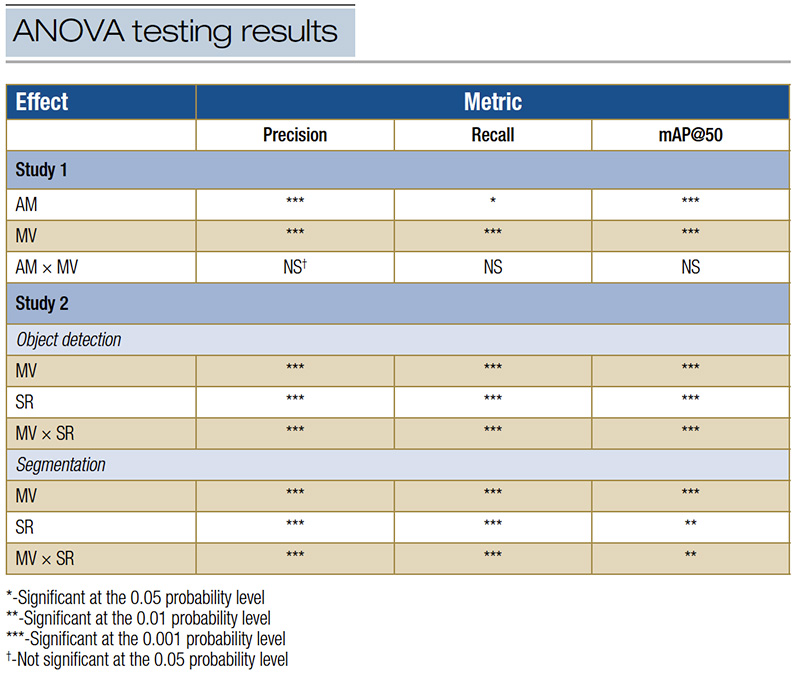

Table 2. Results of ANOVA testing for the effects of annotation method (AM), model variant (MV) and split ratio (SR), and their interactions, on precision, recall and mean average precision calculated at an intersection over union threshold of 0.50 (mAP@50) achieved by You Only Look Once 8 (YOLOv8) object detection and segmentation models trained using the single-step procedure with manually labeled images only (Study 1) or the two-step procedure incorporating automated annotation by a pretrained model (Study 2) for the recognition of spotted spurge [Chamaesyce maculata (L.) Small] infestation in Latitude 36 bermudagrass [Cynodon dactylon (L.) Pers. × C. transvaalensis Burtt-Davy] maintained as a golf course fairway. Main effects and interactions that were significant for at least one measured parameter are presented.

Evaluation metrics

Model performance in spotted spurge recognition was evaluated on the 300 validation images, divided into three 100-image folds. Intersection over union (IoU) quantified the overlap between predicted and actual (ground-truth) annotations and was used to classify predictions as true positives (TP) or false positives (FP), and undetected ground-truth instances as false negatives (FN). These counts were used to calculate precision (Equation 1), recall (Equation 2) and mean average precision (mAP) at IoU ≥ 0.50 (mAP@50, Equation 3), the final performance metric (4). All metrics range from 0.0 to 1.0, and values above 0.50 were considered to indicate adequate recognition (11).
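How IoU turns predictions into TP, FP and FN counts can be illustrated for bounding boxes. This is a simplified sketch, assuming axis-aligned boxes given as (x1, y1, x2, y2), a single class, and greedy matching of predictions in descending confidence order to the unmatched ground-truth box with the highest IoU. Evaluation toolkits such as Ultralytics apply the same idea with their own matching details, and for segmentation the IoU would be computed between masks rather than boxes. The function names are illustrative.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def match(preds: List[Tuple[Box, float]], gts: List[Box],
          thr: float = 0.5) -> Tuple[int, int, int]:
    """Count TP, FP and FN for one image by greedy confidence-ordered matching."""
    unmatched = list(range(len(gts)))
    tp = fp = 0
    for box, _conf in sorted(preds, key=lambda p: p[1], reverse=True):
        best: Optional[int] = None
        best_iou = thr
        for g in unmatched:
            o = iou(box, gts[g])
            if o >= best_iou:  # IoU >= threshold counts as a hit
                best, best_iou = g, o
        if best is None:
            fp += 1  # no remaining ground truth overlaps enough
        else:
            tp += 1
            unmatched.remove(best)  # each ground truth can be matched once
    fn = len(unmatched)  # ground truths never detected
    return tp, fp, fn
```

Precision and recall then follow from these counts as in Equations 1 and 2; a duplicate detection of an already matched plant part is counted as a false positive.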

Equation 1

$$\text{Precision} = \frac{TP}{TP + FP}$$

Equation 2

$$\text{Recall} = \frac{TP}{TP + FN}$$

Equation 3

$$\text{mAP@50} = \int_{0}^{1} \text{Precision}(\text{Recall}) \, d(\text{Recall})$$
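Equations 1 to 3 can be illustrated numerically for a single class (spotted spurge), in which case mAP@50 equals that class's average precision. The sketch assumes each prediction has already been labeled TP or FP at IoU ≥ 0.50 and approximates the integral in Equation 3 by the common all-point interpolation (precision made non-increasing with recall before summing). Ultralytics and other toolkits use their own interpolation schemes, so reported values can differ slightly from this sketch; the function name is illustrative.

```python
from typing import List, Tuple


def precision_recall_ap(scored: List[Tuple[float, bool]],
                        n_gt: int) -> Tuple[float, float, float]:
    """Return final precision, final recall and AP for a single class.

    `scored` holds (confidence, is_true_positive) for every prediction,
    already matched at IoU >= 0.50; `n_gt` is the number of ground-truth
    instances (TP + FN).
    """
    if n_gt <= 0:
        raise ValueError("need at least one ground-truth instance")
    ranked = sorted(scored, key=lambda s: s[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for _conf, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))  # Equation 1 at this cut-off
        recalls.append(tp / n_gt)          # Equation 2 at this cut-off
    final_p = precisions[-1] if ranked else 0.0
    final_r = recalls[-1]
    # All-point interpolation: make precision non-increasing with recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Equation 3 as a sum: interpolated precision times each step in recall.
    ap = sum((recalls[i] - recalls[i - 1]) * precisions[i]
             for i in range(1, len(recalls)))
    return final_p, final_r, ap


if __name__ == "__main__":
    # Three ranked predictions (two hits, one false alarm) for three plants.
    print(precision_recall_ap([(0.9, True), (0.8, False), (0.7, True)], 3))
```

Because AP summarizes precision over every confidence cut-off, it is less sensitive to the single confidence threshold used when reporting precision and recall.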

Statistical analysis

An analysis of variance (ANOVA) was performed, with Fisher's protected LSD test (P ≤ 0.05) for mean comparisons. In this multifactorial design, each validation fold was treated as a replicate. For the first objective, YOLOv8 model variant and annotation method were fixed effects. For the second objective, analyses were conducted separately for each annotation method, with model variant and dataset split ratio as fixed effects. Statistical analyses were performed in RStudio.

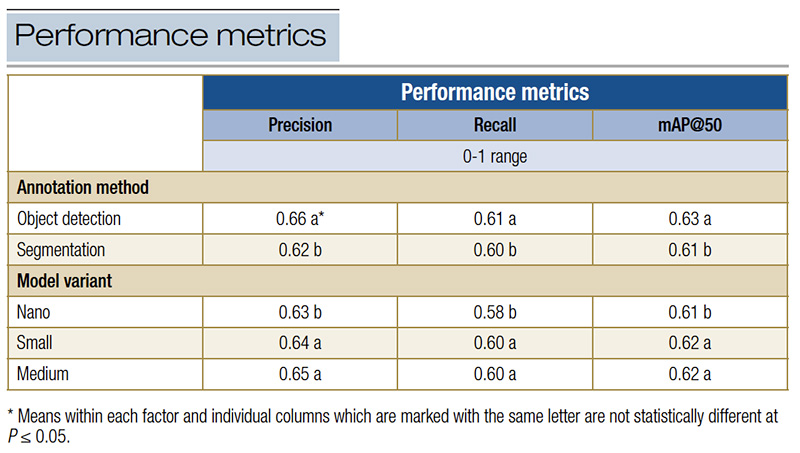

Table 3. Performance metrics of precision, recall and mean average precision calculated at an intersection over union threshold of 0.50 (mAP@50) achieved by You Only Look Once 8 (YOLOv8) object detection and segmentation variants trained over 100 epochs using single-step procedure with manually annotated images only for the recognition of spotted spurge [Chamaesyce maculata (L.) Small] infestation in Latitude 36 bermudagrass [Cynodon dactylon (L.) Pers. × C. transvaalensis Burtt-Davy] maintained as a golf course fairway.

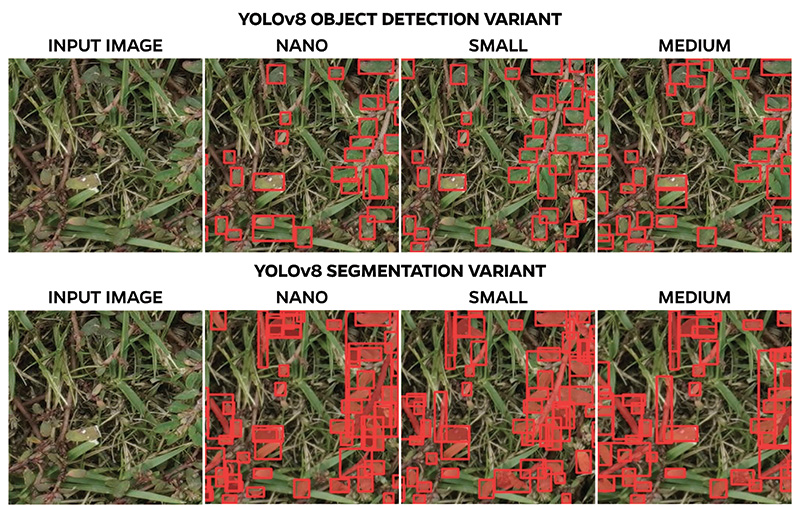

Figure 4. Visual representation of the performance of YOLOv8 segmentation and object-detection variants in recognizing spotted spurge [Chamaesyce maculata (L.) Small] in Latitude 36 bermudagrass [Cynodon dactylon (L.) Pers. × C. transvaalensis Burtt-Davy] maintained as a golf course fairway.

Results and discussion

Comparison of segmentation and object detection performance using one-step training procedure

Precision, recall and mAP@50 were all affected by annotation method and model variant, with no significant interaction between them (Table 2). All tested YOLOv8 models and variants achieved adequate recognition of spotted spurge, with all performance metrics ≥0.60, surpassing the 0.50 threshold (Table 3, Figures 3 and 4). Moreover, object detection consistently outperformed segmentation on all three metrics across model variants, although the differences were minimal. Nano models showed lower precision and recall than the other variants, and recall lagged behind precision in all models (Table 3).

While previous research demonstrated the potential of segmentation for recognizing distinct clumps or mats of weeds against contrasting backgrounds of straw-colored turfgrass (6), our research presents the first application of YOLO-based segmentation to identify intricate weed structures blended into actively growing turfgrass. The lateral growth of spotted spurge allows it to creep into thinner parts of the turf canopy, forming irregular patches (11). Targeted spraying aims to treat detected weeds (15), but irregular weed shapes, like those of spotted spurge, can expand the spray zone, wasting herbicide and reducing precision. Segmentation-based detection mitigates this by focusing on specific plant parts, reducing nontarget misapplication. This can enhance system accuracy, improve herbicide efficiency and reduce the environmental footprint of weed control (11, 12).
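A small worked example illustrates why irregular shapes inflate the treated area when a whole patch is enclosed by a single bounding box. The sketch compares the area of a hypothetical L-shaped patch outline, computed with the shoelace formula, against the area of its enclosing box; the coordinates are invented for illustration, and nozzle geometry is ignored.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(pts: List[Point]) -> float:
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def bbox_area(pts: List[Point]) -> float:
    """Area of the axis-aligned box enclosing the polygon."""
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


if __name__ == "__main__":
    # Hypothetical L-shaped patch (cm): a 10 x 2 arm joined to a 2 x 8 arm.
    patch = [(0, 0), (10, 0), (10, 2), (2, 2), (2, 10), (0, 10)]
    mask, box = polygon_area(patch), bbox_area(patch)
    print(f"mask {mask:.0f} cm2, box {box:.0f} cm2, "
          f"{1 - mask / box:.0%} of the box is non-target")
```

For this shape the outline covers 36 square centimeters while its box covers 100, so most of a whole-patch box would be turf rather than weed; part-level boxes or masks shrink that gap.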

Contrary to initial expectations, object detection outperformed segmentation in this study. Although the difference appeared marginal, it was statistically significant (Table 3). This contrasts with reports of significant improvements when switching from object detection to segmentation (15). In our study, we followed the approach of drawing tight bounding boxes around individual parts of the spotted spurge plant rather than around the entire plant (12). Because the foliage is small, these bounding boxes closely resembled segmentation masks (Figure 3), but they were simpler and faster to draw and less error-prone, which may explain the slightly better object-detection performance. Among the YOLOv8 variants, the nano variant performed slightly but significantly worse than the small and medium variants (Table 3). This contrasts with previous research that found no significant differences among YOLO variants (13). Despite these differences, the overall performance of all models was subjectively close.

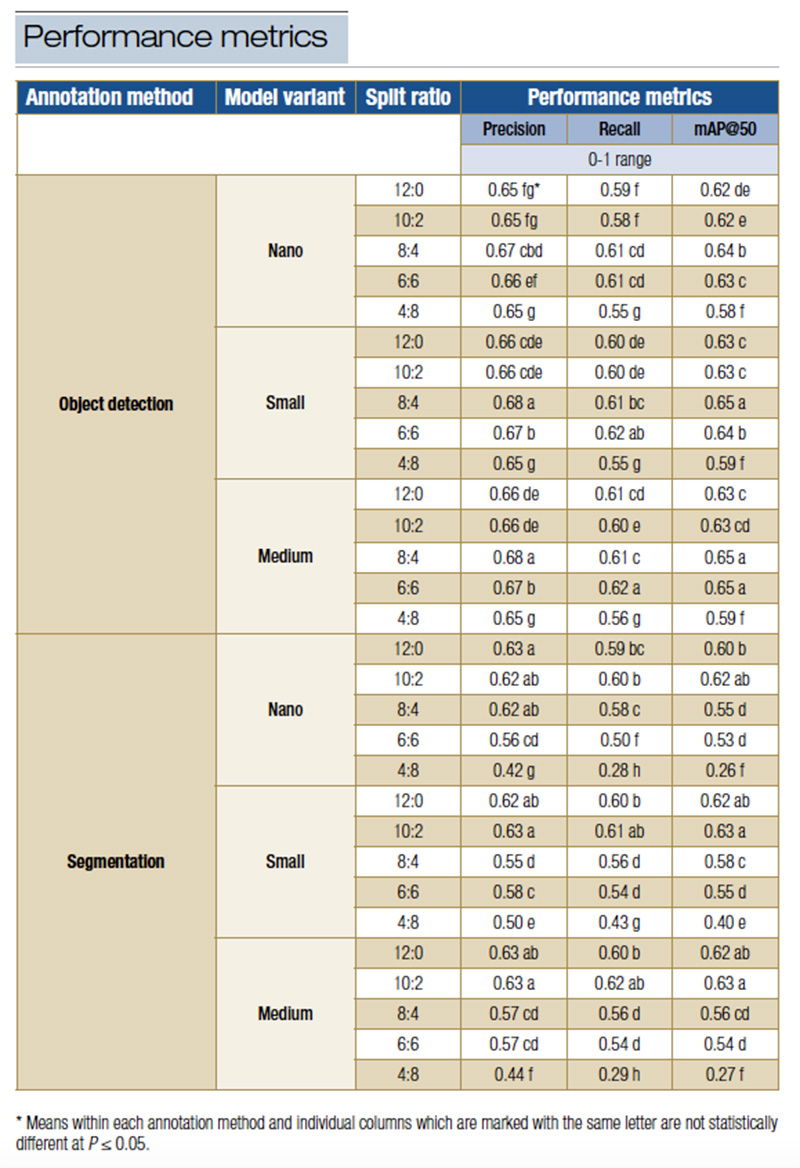

Table 4. Performance metrics of precision, recall and mean average precision calculated at an intersection over union threshold of 0.50 (mAP@50) achieved by various You Only Look Once 8 (YOLOv8) object detection and segmentation variants trained over 100 epochs using two-step procedure incorporating automated annotation using a pretrained model for the recognition of spotted spurge [Chamaesyce maculata (L.) Small] infestation in Latitude 36 bermudagrass [Cynodon dactylon (L.) Pers. × C. transvaalensis Burtt-Davy] maintained as a golf course fairway. The dataset split ratio is represented in the form of A:B, where A denotes the number of manually annotated images used for the model pretraining, and B represents the automatically labeled images generated by the pretrained model. Both A and B were combined in the final training phase.

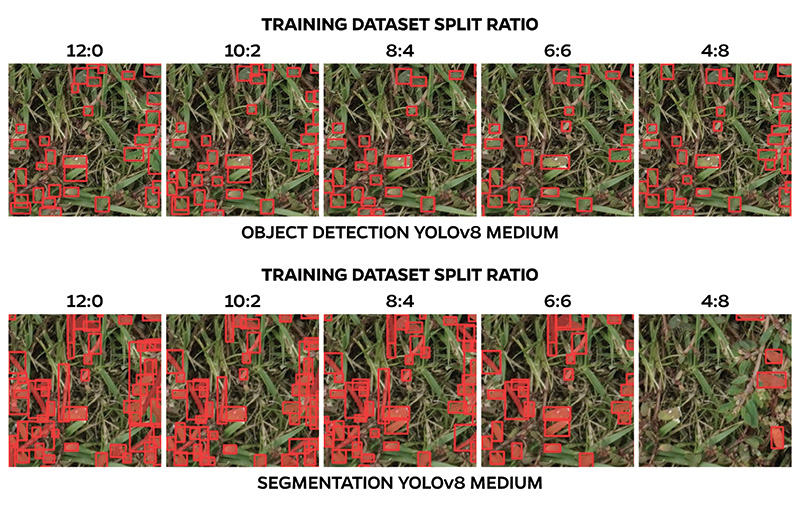

Figure 5. Visual representation of the performance of YOLOv8 segmentation and object-detection variants in recognizing spotted spurge [Chamaesyce maculata (L.) Small] in Latitude 36 bermudagrass [Cynodon dactylon (L.) Pers. × C. transvaalensis Burtt-Davy] maintained as a golf course fairway. The dataset split ratio is represented in the form of A:B, where A denotes the proportion of manually annotated images used for the model pretraining, and B represents the proportion of automatically labeled images generated by the pretrained model. Both A and B were combined in the final training phase.

All YOLOv8 models in this study were trained on a constrained dataset of 1,200 images and achieved adequate spotted spurge recognition (all metrics >0.60), consistent with previous research (11). However, none of the models achieved a perfect or even good fit (all metrics <0.80) (Table 3). The primary limitation is likely insufficient representation of real-life variability in spotted spurge morphology and environmental context, compounded by the small dataset size. Further research is needed to explore the relationship between dataset size and adequate representation of target weeds.

While the study highlights the potential of segmentation and object detection for identifying complex weed structures in turf, it also reveals areas needing improvement before commercially viable models can be developed. Performance disparities also likely stem from dataset imperfections, with labeling inaccuracies arising from similarities in shape or color between the target and the turf. As a result, some background was mistakenly included in annotations, consistent with recall values being lower than precision values (Table 3). Tight bounding boxes around small spotted spurge foliage, rather than boxes enclosing an entire irregularly shaped plant patch, may improve performance (12). Segmentation, which separates the target from the background more precisely, could be more suitable for turfgrass scenarios despite its slightly lower performance in this study. Overall, manual annotation, while essential, is labor-intensive and prone to human error, highlighting the need for procedural enhancements to ensure successful integration of this technology into turfgrass settings.

Evaluation of two-step procedure for accelerated annotation and model development

All evaluated metrics (i.e., precision, recall and mAP@50) were affected by the interaction between model variant and the training-dataset split ratio of human- to auto-labeled images (Table 2). Given the significant difference between annotation methods demonstrated under the first objective (Table 3), the two methods were analyzed separately in this experiment.

For object detection (Table 4), the 8:4 split yielded the best overall performance, followed by 6:6, both surpassing the fully manually labeled data (12:0), while the 4:8 split consistently reduced all performance metrics. Nano variants consistently underperformed compared to the small and medium variants across all split ratios (Table 4, Figure 5). Segmentation models exhibited different trends across split ratios compared to object detection (Table 4, Figure 5). The 10:2 split performed similarly to fully human-annotated data (12:0), but overall performance declined as the proportion of auto-labeled images increased, starting at 8:4. The 4:8 split failed to achieve adequate recognition of spotted spurge across all variants. Similar to object detection, the small and medium models generally outperformed the nano variant, particularly at higher-performing split ratios (Table 4, Figure 5).

Limited previous turfgrass-specific work on SSL approaches focused on less-complex image classification networks (2, 8). This experiment determined whether a two-step SSL training process could expedite model development or improve the performance of more complex object-detection and segmentation algorithms. It is the first report of SSL applied to these advanced models in turfgrass, and the results showed both benefits. Object-detection performance remained stable across human-to-auto-labeled image ratios until the split was reversed (4:8), with improved mAP@50 at the 8:4 and 6:6 splits (Table 4). Thus, the 6:6 split not only doubled the amount of annotated data obtained from the same manual effort but also improved overall model performance. In contrast, segmentation models deteriorated once the auto-labeled share reached that of the 8:4 split, making 10:2 the cutoff ratio. Recall was the most affected metric, particularly for segmentation, indicating greater sensitivity to inconsistencies and transfer bias in auto-annotation. Despite recognizing spurge accurately, the segmentation models struggled to identify all instances in the validation set. These findings align with previous studies showing that lower ratios of human-labeled data may reduce model performance (8, 17). Additionally, among the YOLO variants, the medium and small models, with their larger architectures, performed better overall than the nano variant, highlighting the importance of model size for optimal results. Fine-tuning smaller models may yield performance comparable to that of larger models; however, this requires further verification. Smaller variants also offer advantages in field implementation because of their lower computational demands.

The research says

- As previously reported for object detection (11), this study confirms that segmentation effectively identifies weeds with intricate shapes intertwined in turf canopies.

- Though the difference was subtle, object detection outperformed segmentation, likely due to (a) spotted spurge’s small foliage enabling tight bounding boxes resembling segmentation masks, (b) the complexity and error-prone nature of delineating exact target shapes and (c) segmentation’s increased sensitivity to annotation errors, particularly with automatically labeled images.

- The proposed two-step SSL-based strategy effectively accelerates the time- and labor-intensive annotation process, particularly for object detection models, without compromising performance.

- Segmentation may be more suitable for certain turfgrass-specific situations, offering more accurate target separation, despite its slightly inferior performance in this case.

- Among the three YOLOv8 variants tested, the small and medium variants achieved the most reliable performance under the investigated configurations.

Literature cited

- Badgujar, C.M., A. Poulose and H. Gan. 2024. Agricultural object detection with You Only Look Once (YOLO) algorithm: A bibliometric and systematic literature review. arXiv preprint arXiv:2401.10379 (https://doi.org/10.48550/arXiv.2401.10379).

- Chen, X., T. Liu, K. Han, X. Jin and J. Yu. 2024. Semi-supervised learning for detection of sedges in sod farms. Crop Protection 106626 (https://doi.org/10.1016/j.cropro.2024.106626).

- Coleman, G.R.Y., M.V. Bagavathiannan, A. Bender, N.S. Boyd, K. Hu, A.W. Schumann, S.M. Sharpe, M.J. Walsh and Z. Wang. 2022. Weed detection to weed recognition: Reviewing 50 years of research to identify constraints and opportunities for large-scale cropping systems. Weed Technology 36(6):741-757 (https://doi.org/10.1017/wet.2022.84).

- Furlanetto, R.H., A. Schumann and N. Boyd. 2024. A mobile application to identify poison ivy (Toxicodendron radicans) plants in real time using convolutional neural network. Multimedia Tools and Applications 83:60419-60441 (https://doi.org/10.1007/s11042-023-17920-3).

- Hurtik, P., V. Molek, J. Hula, M. Vajgl, P. Vlasanek and T. Nejezchleba. 2022. Poly-YOLO: Higher speed, more precise detection and instance segmentation for YOLOv3. Neural Computing and Applications 34(10):8275-8290 (https://doi.org/10.1007/s00521-021-05978-9).

- Jiang, K., U. Afzaal and J. Lee. 2022. Transformer-based weed segmentation for grass management. Sensors 23(1):65 (https://doi.org/10.3390/s23010065).

- Jin, X., T. Liu, Y. Chen and J. Yu. 2022. Deep learning-based weed detection in turf: A review. Agronomy 12(12):3051 (https://doi.org/10.3390/agronomy12123051).

- Liu, T., D. Zhai, F. He and J. Yu. 2024. Semi-supervised learning methods for weed detection in turf. Pest Management Science 80(6):2552-2562 (https://doi.org/10.1002/ps.7959).

- McCullough, P.E., J.S. McElroy, J. Yu, H. Zhang, T.B. Miller, S. Chen, C.R. Johnston and M.A. Czarnota. 2016. ALS-resistant spotted spurge (Chamaesyce maculata) confirmed in Georgia. Weed Science 64(2):216-222.

- Medrano, R. 2021. Feasibility of real-time weed detection in turfgrass on an edge device. California State University (http://hdl.handle.net/20.500.12680/3b591f88j).

- Petelewicz, P., Q. Zhou, M. Schiavon, G.E. MacDonald, A.W. Schumann and N.S. Boyd. 2024. Simulation-based nozzle density optimization for maximized efficacy of a machine vision-based weed control system for applications in turfgrass settings. Weed Technology 38:e25 (https://doi.org/10.1017/wet.2024.7).

- Sharpe, S.M., A.W. Schumann and N.S. Boyd. 2020. Goosegrass detection in strawberry and tomato using a convolutional neural network. Scientific Reports 10(1):9548 (https://doi.org/10.1038/s41598-020-66505-9).

- Sportelli, M., O.E. Apolo-Apolo, M. Fontanelli, C. Frasconi, M. Raffaelli, A. Peruzzi and M. Perez-Ruiz. 2023. Evaluation of YOLO object detectors for weed detection in different turfgrass scenarios. Applied Sciences 13(14):8502 (https://doi.org/10.3390/app13148502).

- Vijayakumar, V., Y. Ampatzidis, J.K. Schueller and T. Burks. 2023. Smart spraying technologies for precision weed management: A review. Smart Agricultural Technology 6:100337 (https://doi.org/10.1016/j.atech.2023.100337).

- Yang, J., Y. Chen and J. Yu. 2024. Convolutional neural network based on the fusion of image classification and segmentation module for weed detection in alfalfa. Pest Management Science 80(6):2751-2760 (https://doi.org/10.1002/ps.7979).

- Zhao, Z., L. Alzubaidi, J. Zhang, Y. Duan and Y. Gu. 2023. A comparison review of transfer learning and self-supervised learning: Definitions, applications, advantages and limitations. Expert Systems with Applications 122807 (https://doi.org/10.1016/j.eswa.2023.122807).

- Zhou, S., S. Tian, L. Yu, W. Wu, D. Zhang, Z. Peng and Z. Zhou. 2024. Growth threshold for pseudo labeling and pseudo label dropout for semi-supervised medical image classification. Engineering Applications of Artificial Intelligence 130:107777 (https://doi.org/10.1016/j.engappai.2023.107777).

Mikerly M. Joseph is a graduate assistant and Katarzyna A. Gawron is a biological scientist, both in Pawel Petelewicz’s lab in the Agronomy Department at the University of Florida (UF/IFAS), Gainesville; Chang Zhao is an assistant professor of ecosystem services and artificial intelligence and Gregory E. MacDonald is a professor of weed science, both in the UF/IFAS Agronomy Department, Gainesville; Arnold W. Schumann is a professor in the Department of Soil, Water and Ecosystem Sciences at the UF/IFAS Citrus Research and Education Center, Lake Alfred; Nathan S. Boyd is a professor of weed and horticulture sciences in the Department of Horticulture and an associate center director at the UF/IFAS Gulf Coast Research and Education Center, Wimauma; and Paweł Petelewicz (petelewicz.pawel@ufl.edu) is an assistant professor of turfgrass weed science in the UF/IFAS Agronomy Department, Gainesville.